April 9, 2015

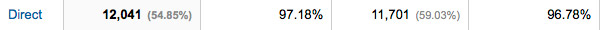

When I evaluate a website with a client, we look at the stats in Google Analytics for a snapshot of traffic patterns and traffic sources. Recently, a website owner was excited to see that her website had 21,953 visits for the past 30 days. However, upon further digging, I noticed that 12,041 of these visits (about 55%) were from direct traffic. Given the circumstances and the 96.78% bounce rate, this seemed odd, so we took it a step further and looked at the city source for these visits. It turned out a large percentage of the visits were from a select few cities. Hmm, that’s out of character for a website that serves the entire US.

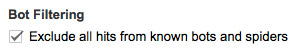

The next step was to add code to the website to log the request headers and IP address of each visit. And what did we find? Bots! Robots were hitting the website and driving up the bounce rate! Yikes! Next, we looked for a fix… Under “View Settings,” Google Analytics has “Bot Filtering” with the option to “Exclude all hits from known bots and spiders,” so we turned that ON. Then, we waited for a few days to see if anything changed.
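For anyone curious what that kind of tracking might look like, here is a minimal sketch in Python. It is not the code we used on the client's site. It assumes a Python site that exposes a standard WSGI `environ` dictionary, and the function names `extract_hit` and `top_ips` are made up for illustration. The idea is simply to record the IP address and a few headers for each request, then count which IPs show up most often.

```python
from collections import Counter


def extract_hit(environ):
    """Pull the client IP and a few request headers from a WSGI environ dict.

    Assumption: the site runs on a WSGI-style server, so these standard
    keys are available. Missing headers come back as empty strings.
    """
    return {
        "ip": environ.get("REMOTE_ADDR", ""),
        "user_agent": environ.get("HTTP_USER_AGENT", ""),
        "referer": environ.get("HTTP_REFERER", ""),
        "path": environ.get("PATH_INFO", ""),
    }


def top_ips(hits, n=5):
    """Return the n most frequent IP addresses as (ip, count) pairs."""
    return Counter(hit["ip"] for hit in hits).most_common(n)


if __name__ == "__main__":
    # Made-up requests using documentation-only IP ranges.
    sample = [
        {"REMOTE_ADDR": "203.0.113.7", "PATH_INFO": "/listings/1"},
        {"REMOTE_ADDR": "203.0.113.7", "PATH_INFO": "/listings/2"},
        {"REMOTE_ADDR": "198.51.100.2", "PATH_INFO": "/",
         "HTTP_REFERER": "https://www.google.com/"},
    ]
    hits = [extract_hit(env) for env in sample]
    for ip, count in top_ips(hits):
        print(ip, count)
```

A handful of IPs with a lot of hits, no referer, and an odd or empty user agent is the kind of pattern that points to a robot rather than a person.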

Meanwhile, you might be wondering why this matters. Well, some bots are useful and help your website get found, while others are detrimental. At the very least, these bots are using up your bandwidth and possibly slowing down your web server. A more critical issue is if you have a website that offers paid advertising. A high bounce rate will scare away your ad customers. Why would they want to pay for ads on a website when 97% of the users leave without interacting with the content on the page? Someone may even be purposely targeting a website to increase its bounce rate so that their own website with similar content looks better in comparison.

After reviewing the data collected over the next few days, I could see that one robot in particular was targeting a specific section of the website, and I wondered if someone was scraping data from this website to use on their own. Hmm, what to do about that? Remember that Google’s setting is for known bots, and this bot was running under the radar. We could block the IP, but they could use another one, and that becomes an endless game of cat and mouse. We could program pages to only accept visitors who are referred by other pages of the same site, but that would kill the search engine traffic. How about sending the robot to a blank page before it gets a chance to read the content? I know the answer will appear as soon as I stop thinking so hard about it!
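To make the trade-offs above a little more concrete, here is a rough Python sketch of two of those ideas. Nothing in it comes from the client's site: the host name `www.example.com`, the function names, and the set of suspected IPs are all hypothetical. The first function is the "same-site referer only" rule, and the second is the "blank page" idea applied to a list of IPs we already suspect.

```python
from urllib.parse import urlparse

SITE_HOST = "www.example.com"  # hypothetical host name for illustration


def is_same_site_referer(referer, site_host=SITE_HOST):
    """True only if the Referer header points at a page on our own site."""
    return urlparse(referer).hostname == site_host


def choose_body(ip, suspected_ips, page_body):
    """Return an empty page for suspected bot IPs, the real page otherwise.

    Assumption: suspected_ips is a set built by hand from the tracking data.
    """
    return "" if ip in suspected_ips else page_body


if __name__ == "__main__":
    # A visitor arriving from a search engine fails the same-site rule,
    # which is why that rule would kill the search engine traffic.
    print(is_same_site_referer("https://www.google.com/search?q=widgets"))
    print(is_same_site_referer("https://www.example.com/listings"))

    # The blank-page idea only works for IPs we already know about.
    suspects = {"203.0.113.7"}
    print(repr(choose_body("203.0.113.7", suspects, "<p>Listing</p>")))
    print(repr(choose_body("198.51.100.2", suspects, "<p>Listing</p>")))
```

Notice that the blank-page version still depends on a list of IPs, so it runs into the same cat-and-mouse problem as blocking them outright. A real fix would need some other signal to decide who gets the blank page.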

Anyway, the next time you review your traffic stats, take a closer look at what’s really going on behind the scenes.